Implementing and understanding actor-critic algorithms

Overview

Actor-critic methods combine the benefits of policy gradient methods (the actor) with value function approximation (the critic). The actor learns a policy, while the critic evaluates states to reduce variance in policy gradient estimates.

The Algorithm

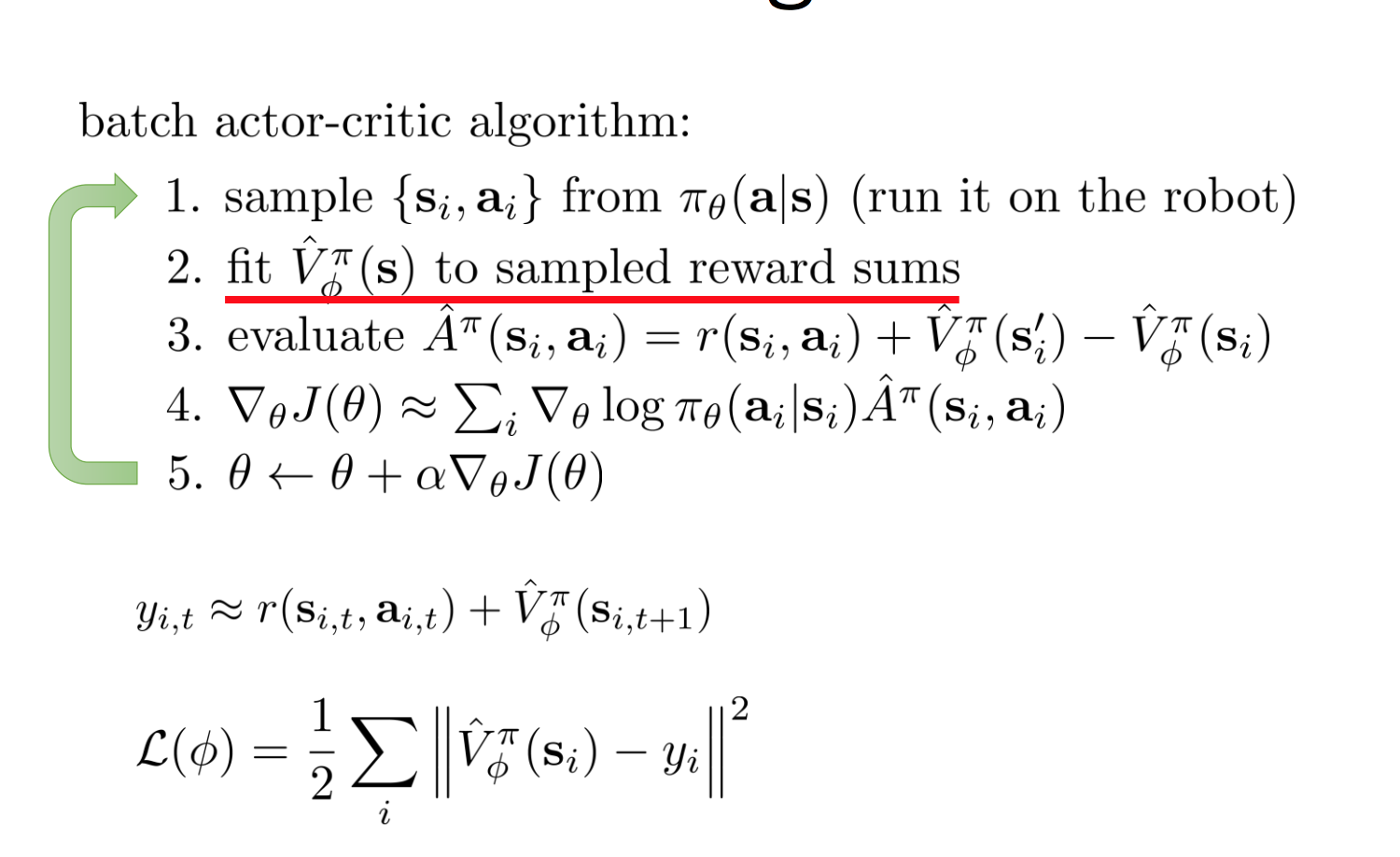

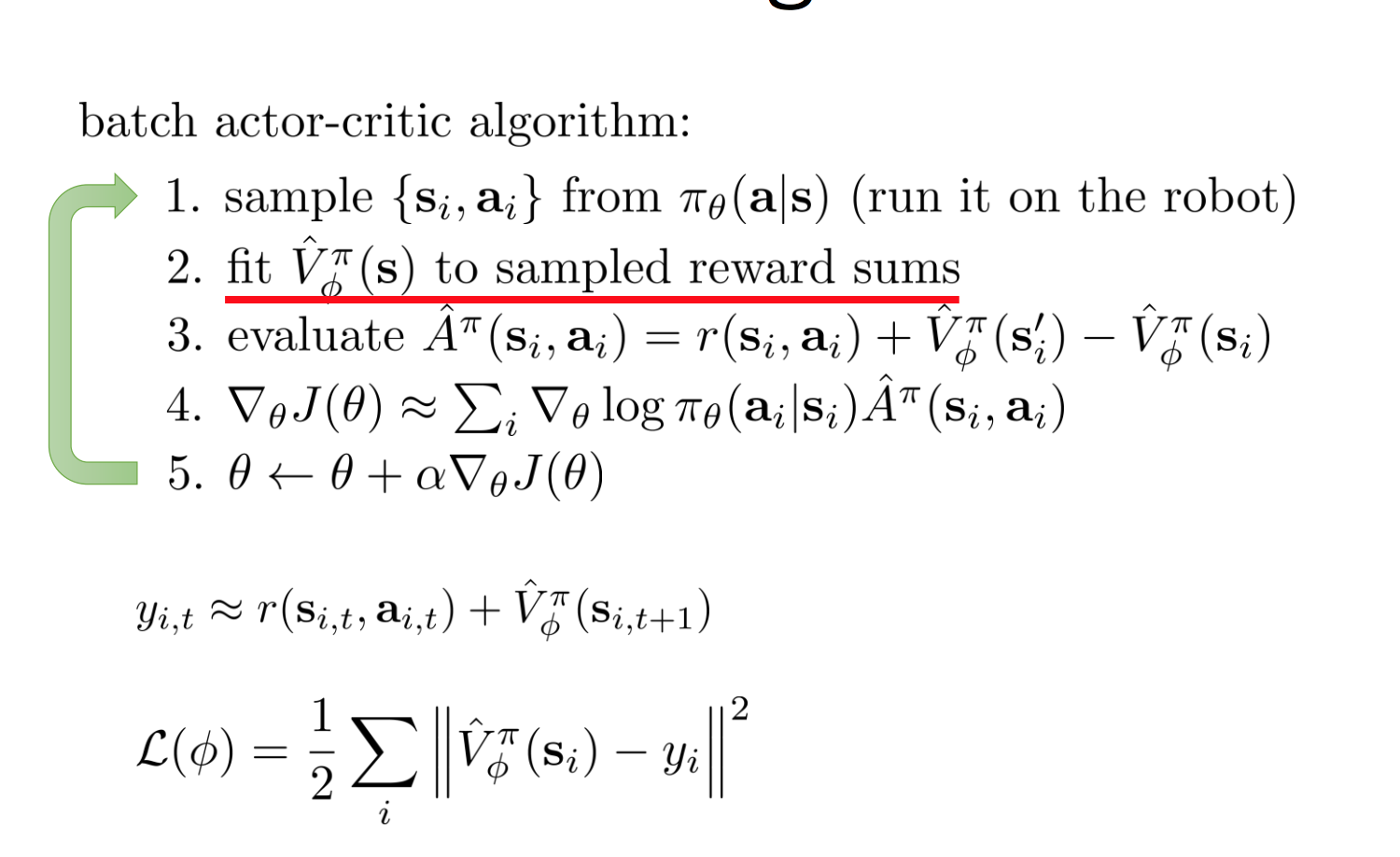

Following Sergey Levine's lecture, the batch actor-critic algorithm works as follows:

Key Components

Actor: The policy network \(\pi_\theta(a|s)\) that maps states to action distributions.

Critic: The value network \(V_\phi(s)\) that estimates expected returns from a state.

Advantage: The advantage function \(A(s,a) = Q(s,a) - V(s)\) tells us how much better an action is compared to the average.

Why Actor-Critic?

- Lower variance: Using a learned baseline (critic) reduces variance compared to REINFORCE

- Online learning: Can update after each step, not just at episode end

- Continuous actions: Works well with continuous action spaces

Implementation: actorCritic.py